Observability Knowledge Graph

Compared to the old term “monitoring”, the new buzzword “observability” is not merely a marketing slogan. It reflects a new set of challenges brought by the cloud-native paradigm shift. This blog provides an engineer’s perspective on these challenges and explains why Asserts thinks a knowledge graph has a lot of potential to make them more tractable.

What Matters For Observability

Many articles have explained the difference between monitoring and observability. As engineers, we naturally focus on tooling and solutions. From this perspective, monitoring is about tools that gather predefined sets of information about the state of each system element, while observability is about solutions that help us explore the monitoring output and discover problems we may not already know about.

Architectural complexity

Reasoning about a system is hard. Over the last decade, tools like the ubiquitous Prometheus exporters have simplified the first part of the problem (monitoring), but the second part (observability) remains largely unaddressed. Modern cloud-native applications are complex on a different level, as they are built from countless third-party components and run on virtualized infrastructure. The complexity for observability now comes not only from each element but also from their highly dynamic interactions.

Diverse data sources

Observability data come in different data models. Metrics are time-series data, some with a predefined schema and others with a more flexible structure. Logs are closer to free-form text, while traces fall somewhere in between. Different data models often require different technology stacks to store, index, and query, which adds to the overhead of an observability infrastructure.

Although SaaS services can take away much of the infrastructure complexity, engineers still have to deal with multiple products with different query syntaxes. Some vendors try to unify the query languages, but that often leads to a lowest-common-denominator syntax with limited functionality and scalability. Others try to present these heterogeneous data in a single pane of glass, but that usually ends up as a superficial collection of different visual elements. Moreover, a “glass pane” lacks the interactivity crucial for in-depth exploration and discovery.

Fragmented domain expertise

Then there is the human element. An organization often has many teams, and each team may build and run multiple microservices. Each team member has their share of product domain knowledge, and each team often has its own expertise in the technology stack it uses. However, observability cannot be limited by these organizational boundaries. SLOs may help clarify accountability, but they do not reduce the challenges of cross-boundary observability.

The Potential of Knowledge Graph

In a nutshell, a knowledge graph is a directed labeled graph in which the nodes and edges have well-defined meanings. Put another way, it is a graph that uses entities and relationships to encode semantic information about specific topics. There are two popular graph data models: RDF (subject-predicate-object triples) and property graphs. For example, a Node hosting a Pod is a minimal property graph: it has two entities of different types, each with its own set of properties, connected by a “HOSTS” relationship from the Node entity to the Pod entity.
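To make the Node/Pod example concrete, here is a minimal sketch of that property graph in Python, along with the same fact written as an RDF-style triple. The `Entity` and `Relationship` classes, the pod name `auth-7d9f`, and the property values are hypothetical illustrations, not Asserts' data model.

```python
from dataclasses import dataclass, field


@dataclass
class Entity:
    """A typed, named entity with an open-ended set of properties."""
    type: str
    name: str
    properties: dict = field(default_factory=dict)


@dataclass
class Relationship:
    """A directed, labeled edge between two entities."""
    type: str
    source: Entity
    target: Entity


# Hypothetical entities and property values, for illustration only
node = Entity("Node", "ip-10-0-86-240", {"instance_type": "m5.large"})
pod = Entity("Pod", "auth-7d9f", {"namespace": "prod"})
hosts = Relationship("HOSTS", node, pod)

# The same fact as an RDF-style (subject, predicate, object) triple
triple = (hosts.source.name, hosts.type, hosts.target.name)
print(triple)  # ('ip-10-0-86-240', 'HOSTS', 'auth-7d9f')
```

In the property graph the properties live on the entities themselves; in the triple form each property would become a triple of its own.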

So far, this sounds pretty plain, so why do we think the knowledge graph will help observability?

To be clear, we understand that a graph is not a suitable data structure for encoding time-series data. Nor is it practical for representing raw logs or traces. However, if we can extract entities and relationships from these data and form a graph, we can leverage that graph for observability.

- A graph can easily visualize a system topology that crosses organizational boundaries. The mental pictures of your best domain experts are now easily accessible to everyone in your organization.

- A graph can empower search with context, making it easy to explore and navigate all the software and hardware components in a cloud-scale application.

- A graph is a good choice for schema-free data integration. We can define entities on the fly without any schema. These entities then serve as an abstraction layer upon which we can answer semantic queries that require combining data from multiple sources.

- A graph provides spatial correlation, which supplements the temporal correlation and reduces the human cognitive load for troubleshooting.
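The schema-free integration point can be illustrated with a small sketch: entities are keyed by type and name, each source merges in whatever properties it knows, and a query then spans both sources. The two sources, the property names, and the `upsert` helper are hypothetical.

```python
from collections import defaultdict

# Entities keyed by (type, name); properties are an open dict, so no schema is declared up front
graph = defaultdict(dict)


def upsert(entity_type, name, **props):
    """Create the entity on first sight, then merge in whatever a source provides."""
    graph[(entity_type, name)].update(props)


# Hypothetical source 1: labels and values derived from metrics
upsert("Service", "auth", namespace="prod", latency_p99_ms=420)
# Hypothetical source 2: an ownership inventory
upsert("Service", "auth", team="identity")
# A brand-new entity type needs no schema change
upsert("Topic", "login-events", partitions=12)

# A query that needs both sources: slow services and the team that owns them
slow_owned = [
    (name, props["team"])
    for (etype, name), props in graph.items()
    if etype == "Service" and props.get("latency_p99_ms", 0) > 300 and "team" in props
]
print(slow_owned)  # [('auth', 'identity')]
```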

If graphs are so helpful, why have they not been utilized much?

For traditional solutions with fixed entity schemas, graph usage is limited to visualizing what their users already know. Such a graph is not an interactive tool for observability. Now that the open-source ecosystem is thriving, we should embrace a flexible entity model that can take advantage of an open world.

Solutions like Prometheus provide contextual information in the form of labels, but the focus is still on data collection and aggregation, not contextualization. With a knowledge graph, we can instead place contextualization at the center of observability. It’s intuitive, easy to explore, and enables automatic correlation. It can also tie together many other observability tasks, like dashboarding and troubleshooting, in a seamless user experience.

How Asserts Utilizes Knowledge Graph

Asserts is a metric intelligence and observability platform. We extensively utilize a knowledge graph to help our users get to the bottom of application and infrastructure problems faster. This section explains how Asserts realizes the benefits of a knowledge graph described above.

Building the graph

Creating a knowledge graph at scale can be challenging. Wikidata was mainly curated by human volunteers, and Google's knowledge base started with the community-built Freebase. LinkedIn's knowledge graph used machine learning to extract entities from user-generated content. An observability knowledge graph does not need such scale initially, but it does need to be curated and maintained automatically.

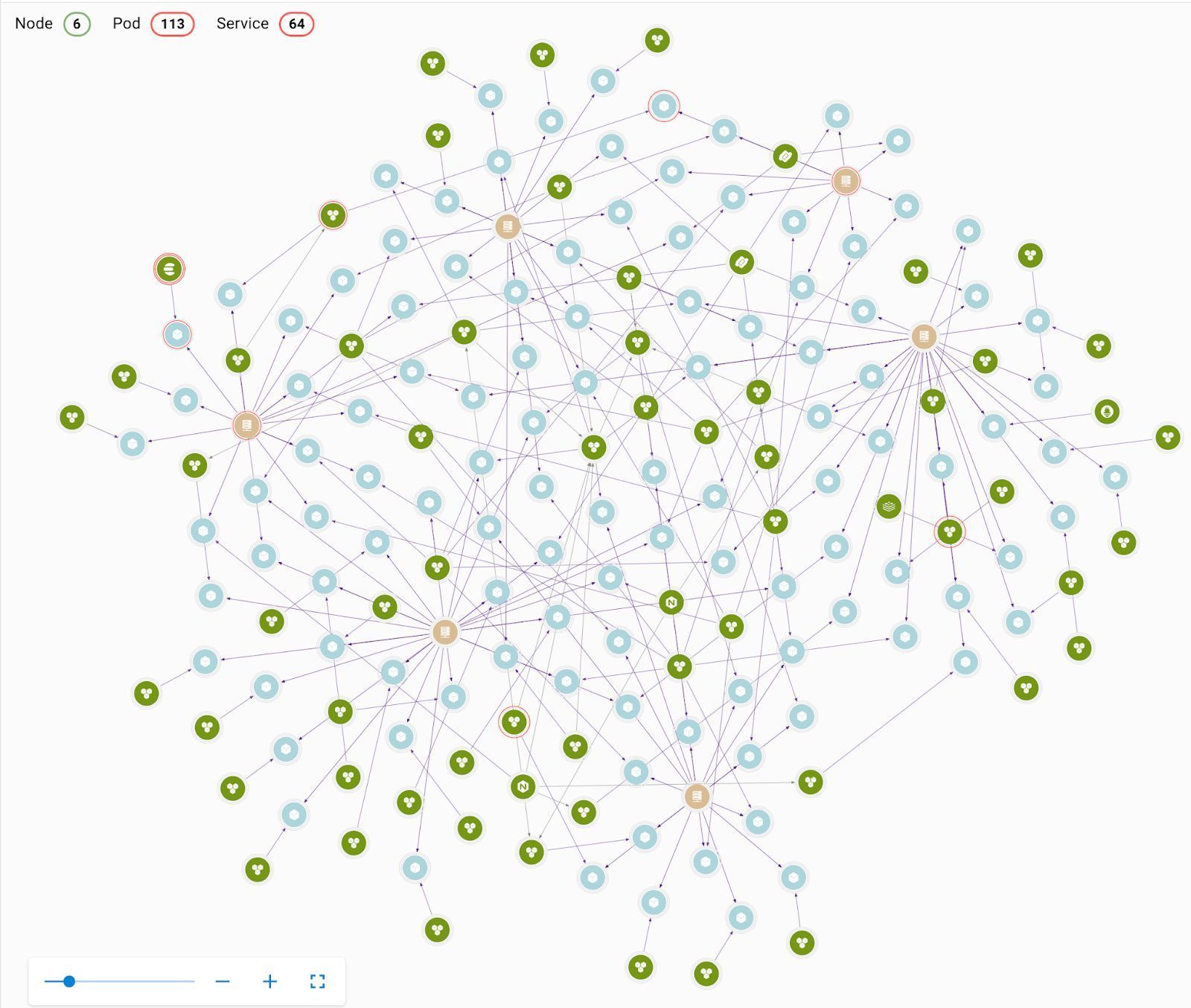

Asserts chose to start with time-series data, a well-understood scope. More specifically, because the Prometheus metric format uses labels to encode contextual information, Asserts can automatically discover the entities in a system and their relationships from the metric stream. These entities and relationships are intuitive for our users to understand: we have infrastructure entities like Nodes, Pods, and Auto Scaling Groups, and application entities like Services and Topics. For relationships, by connecting the dots across multiple time series, we can infer that a Node HOSTS a Pod and that a Service CONTROLS a Pod.
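A minimal sketch of this kind of discovery, assuming each sample arrives as a metric name plus a label set: a rule fires whenever a series carries both labels it needs, and relationships found in different series are merged into one graph. The sample series, label names, and rule table are made up for illustration; Asserts' actual discovery rules may differ.

```python
# Hypothetical samples: (metric name, labels)
series = [
    ("kube_pod_info", {"pod": "auth-7d9f", "node": "ip-10-0-86-240", "namespace": "prod"}),
    ("http_requests_total", {"pod": "auth-7d9f", "service": "auth", "namespace": "prod"}),
    ("http_requests_total", {"pod": "cart-5c2a", "service": "cart", "namespace": "prod"}),
    ("kube_pod_info", {"pod": "cart-5c2a", "node": "ip-10-0-12-7", "namespace": "prod"}),
]

# Hypothetical rules: (source label, target label) -> (source type, relationship, target type)
RULES = {
    ("node", "pod"): ("Node", "HOSTS", "Pod"),
    ("service", "pod"): ("Service", "CONTROLS", "Pod"),
}


def discover(samples):
    """Derive entities and relationships from the label sets of many series."""
    entities, relationships = set(), set()
    for _metric, labels in samples:
        for (src_label, dst_label), (src_type, rel, dst_type) in RULES.items():
            if src_label in labels and dst_label in labels:
                src = (src_type, labels[src_label])
                dst = (dst_type, labels[dst_label])
                entities.update({src, dst})
                relationships.add((src, rel, dst))
    return entities, relationships


entities, relationships = discover(series)
for rel in sorted(relationships):
    print(rel)
```

Neither series alone says which node runs the `auth` service's pod; only by joining on the shared `pod` label do the two relationships meet.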

As metrics keep flowing in, Asserts continuously keeps the graph up to date. Asserts also records the life cycle of each entity, so the graph keeps track of the history of the underlying application as well.
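One way to keep such history is to record when each entity was first and last seen in the metric stream and treat it as gone once it has been absent for longer than a staleness window. The sketch below assumes a hypothetical 5-minute window and scrape timestamps in seconds; it illustrates the idea rather than Asserts' implementation.

```python
from dataclasses import dataclass

# Hypothetical: an entity unseen for 5 minutes is treated as gone
STALENESS_SECONDS = 300


@dataclass
class Lifecycle:
    first_seen: int
    last_seen: int

    def active_at(self, t: int) -> bool:
        """Active from the first sighting until the staleness window after the last one."""
        return self.first_seen <= t <= self.last_seen + STALENESS_SECONDS


# (entity type, entity name) -> lifecycle; entries are never deleted, so history is kept
history: dict[tuple[str, str], Lifecycle] = {}


def observe(entity: tuple[str, str], timestamp: int) -> None:
    """Record that an entity appeared in a scrape at `timestamp` (seconds)."""
    if entity in history:
        history[entity].last_seen = max(history[entity].last_seen, timestamp)
    else:
        history[entity] = Lifecycle(first_seen=timestamp, last_seen=timestamp)


def active_entities(t: int) -> list[tuple[str, str]]:
    return sorted(e for e, lc in history.items() if lc.active_at(t))


# A pod is scraped every minute for 10 minutes, then replaced by a new pod
for t in range(0, 600, 60):
    observe(("Pod", "auth-7d9f"), t)
for t in range(600, 1200, 60):
    observe(("Pod", "auth-8b1e"), t)

print(active_entities(100))   # [('Pod', 'auth-7d9f')]
print(active_entities(1000))  # [('Pod', 'auth-8b1e')]
```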

Searching a graph

Even for a handful of relatively simple applications like our own, navigating the graph can be a daunting task.

Asserts thus provides search expressions as the starting point for exploring the graph. These search expressions are templates to accommodate the schemaless nature of the graph. As we discover more and more entity types, these search expressions automatically include them. For instance, our most basic search expression, “Show all [entity_type]” automatically covers all the new entity types as the graph grows.
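A sketch of how a templated expression such as “Show all [entity_type]” can stay in step with the graph: the expressions are generated from whatever entity types currently exist, so a newly discovered type gets its own expression without any code change. The `graph_entities` set, the template string, and the helper functions are hypothetical.

```python
TEMPLATE = "Show all {entity_type}"

# Hypothetical graph content: (entity type, entity name)
graph_entities = {
    ("Node", "ip-10-0-86-240"),
    ("Pod", "auth-7d9f"),
    ("Service", "auth"),
}


def search_expressions(entities):
    """Instantiate the template once per entity type currently in the graph."""
    entity_types = sorted({etype for etype, _ in entities})
    return [(TEMPLATE.format(entity_type=t), t) for t in entity_types]


def run(entity_type, entities):
    """Evaluate a 'Show all' expression for one entity type."""
    return sorted(name for etype, name in entities if etype == entity_type)


before = [text for text, _ in search_expressions(graph_entities)]

# A new entity type shows up in the metrics; the template itself is unchanged
graph_entities.add(("Topic", "login-events"))
after = [text for text, _ in search_expressions(graph_entities)]

print(after)  # ['Show all Node', 'Show all Pod', 'Show all Service', 'Show all Topic']
print(run("Topic", graph_entities))  # ['login-events']
```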

We can also combine a text search with graph traversal. For example, a search can look for a node whose name contains “ip-10-0-86-240” and for services like “auth” whose pods are hosted on that node, while also showing all the related services and the namespaces they belong to. Users can save such searches as entity groups for later correlation, and they can add a group to the Workbench with a single click when troubleshooting.
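The combined search can be sketched as a text match that selects the starting entities, followed by a fixed traversal: Node HOSTS Pod, Service CONTROLS Pod, and Namespace to Service. The edge list and the `CONTAINS` relationship name for namespaces are hypothetical.

```python
# Hypothetical edges: (source entity, relationship, target entity)
EDGES = [
    (("Node", "ip-10-0-86-240"), "HOSTS", ("Pod", "auth-7d9f")),
    (("Node", "ip-10-0-86-240"), "HOSTS", ("Pod", "cart-5c2a")),
    (("Node", "ip-10-0-12-7"), "HOSTS", ("Pod", "auth-1a2b")),
    (("Service", "auth"), "CONTROLS", ("Pod", "auth-7d9f")),
    (("Service", "auth"), "CONTROLS", ("Pod", "auth-1a2b")),
    (("Service", "cart"), "CONTROLS", ("Pod", "cart-5c2a")),
    (("Namespace", "prod"), "CONTAINS", ("Service", "auth")),
    (("Namespace", "prod"), "CONTAINS", ("Service", "cart")),
]


def outgoing(entity, rel):
    return {dst for src, r, dst in EDGES if src == entity and r == rel}


def incoming(entity, rel):
    return {src for src, r, dst in EDGES if dst == entity and r == rel}


def search(node_text, service_text):
    """Nodes whose name contains node_text, then matching services with pods on them."""
    nodes = {src for src, _, _ in EDGES if src[0] == "Node" and node_text in src[1]}
    paths = []
    for node in nodes:
        for pod in outgoing(node, "HOSTS"):
            for service in incoming(pod, "CONTROLS"):
                if service_text in service[1]:
                    for namespace in incoming(service, "CONTAINS"):
                        paths.append((node[1], pod[1], service[1], namespace[1]))
    return sorted(paths)


print(search("ip-10-0-86-240", "auth"))
# [('ip-10-0-86-240', 'auth-7d9f', 'auth', 'prod')]
```

Because the traversal runs against the current edge list, the pod `auth-1a2b` on the other node is excluded, and any edge added or removed later changes the result on the next run.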

When a search runs with graph traversal, the actual entities and relationships returned are dynamic. They represent the components and their coupling at run time, not a static structure from pre-existing knowledge. If someone modifies the code in a way that changes service dependencies, whether by design or by accident, these searches will return the changes, making them visible to everyone on your team.
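Since results reflect run-time state, comparing the edges returned by the same search at two points in time surfaces dependency changes. A minimal sketch, assuming a hypothetical `CALLS` relationship between services and made-up snapshots:

```python
def dependency_diff(before, after):
    """Compare two snapshots of edges returned by the same search."""
    before, after = set(before), set(after)
    return {"added": sorted(after - before), "removed": sorted(before - after)}


# Hypothetical snapshots of (caller, relationship, callee) edges
yesterday = [("checkout", "CALLS", "auth"), ("checkout", "CALLS", "cart")]
today = [("checkout", "CALLS", "auth"), ("checkout", "CALLS", "legacy-auth")]

print(dependency_diff(yesterday, today))
# {'added': [('checkout', 'CALLS', 'legacy-auth')], 'removed': [('checkout', 'CALLS', 'cart')]}
```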

Curated knowledge as assertions

In addition to extracting entities and relationships, Asserts also automatically monitors these metrics and surfaces key insights as Assertions. We then feed these assertions back into the graph as curated knowledge. Now we can emulate semantic queries that combine data from two sources: the customer's original time-series data and our domain knowledge.
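A sketch of that feedback loop: a rule evaluates a metric per entity, emits an assertion, and attaches it to that entity in the graph, after which a query can join assertions with relationships. The `MemorySaturation` rule, the 90% threshold, the samples, and the `CONTROLS` map are hypothetical and not taken from Asserts' assertion library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Assertion:
    name: str
    entity: tuple  # (entity type, entity name)
    start: int  # seconds


# Hypothetical threshold: memory usage above 90% of the limit counts as saturation
SATURATION_THRESHOLD = 0.9

# Hypothetical samples per pod: (timestamp, memory used, memory limit)
memory = {
    ("Pod", "auth-7d9f"): [(0, 300, 1000), (60, 920, 1000), (120, 980, 1000)],
    ("Pod", "cart-5c2a"): [(0, 200, 1000), (60, 250, 1000)],
}

# Hypothetical relationship data already in the graph: pod -> controlling service
CONTROLS = {("Pod", "auth-7d9f"): ("Service", "auth"), ("Pod", "cart-5c2a"): ("Service", "cart")}


def memory_saturation(samples_by_entity):
    """Emit one assertion per entity, starting at its first sample above the threshold."""
    found = []
    for entity, samples in samples_by_entity.items():
        for ts, used, limit in samples:
            if used / limit > SATURATION_THRESHOLD:
                found.append(Assertion("MemorySaturation", entity, ts))
                break
    return found


# Feed the assertions back into the graph, keyed by the entity they describe
assertions_by_entity = {}
for assertion in memory_saturation(memory):
    assertions_by_entity.setdefault(assertion.entity, []).append(assertion)

# A query mixing both sources: which services own a pod with an assertion?
affected_services = sorted({CONTROLS[pod][1] for pod in assertions_by_entity})
print(affected_services)  # ['auth']
```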

We’ve built a whole library of Assertions, so our users do not need to be experts on every component in their technology stack. This curated knowledge enriches each customer’s knowledge graph automatically.

Spatial correlation

A big part of observability is troubleshooting. A big part of troubleshooting is temporal correlation, i.e., we put a few related things together, lay their performance metrics side by side in the same time window, and try to figure out what happened. Of course, what to look at usually relies on human knowledge, and the engineer responsible for the troubleshooting often has a mental picture of how things fit together.
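Laying metrics side by side usually means putting them on a common time grid. A minimal sketch, assuming samples as `(timestamp, value)` pairs in seconds and a simple last-value-per-bucket rule; the metric names and values are made up:

```python
def align(series_by_name, start, end, step):
    """Bucket every series onto the same grid over [start, end); the last value in a bucket wins."""
    grid = list(range(start, end, step))
    table = {}
    for name, samples in series_by_name.items():
        row = [None] * len(grid)  # None marks a bucket with no sample
        for ts, value in sorted(samples):
            if start <= ts < end:
                row[(ts - start) // step] = value
        table[name] = row
    return grid, table


grid, table = align(
    {
        "pod_memory_ratio": [(0, 0.3), (65, 0.92), (130, 0.98)],
        "request_latency_ms": [(10, 120), (70, 450)],
    },
    start=0,
    end=180,
    step=60,
)
for name, row in table.items():
    print(name, row)
# pod_memory_ratio [0.3, 0.92, 0.98]
# request_latency_ms [120, 450, None]
```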

Again, observability is about things we may not already know, and an engineer's mental picture of the system may be incomplete. A knowledge graph is thus a great supplement, providing spatial context for troubleshooting.

Furthermore, Asserts can make automatic spatial correlations to aid troubleshooting. For instance, we propagate assertions from ephemeral entities like Pods to their long-lasting parent entities like Nodes. If we add the node from the earlier search to the Asserts Workbench, assertions on any of its pods are laid out in the Workbench as well. In this case, it is easy to see that memory saturation on one of its pods preceded the pod's crash, and that the node has retained both assertions.
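Propagation can be sketched as copying each assertion from an entity to its parent, so the parent's timeline keeps it even after the ephemeral entity disappears. The pod-to-node map, the `PodCrash` assertion name, and the timestamps are hypothetical.

```python
# Hypothetical parent links, derived from Node HOSTS Pod relationships
PARENT = {("Pod", "auth-7d9f"): ("Node", "ip-10-0-86-240")}

# Hypothetical assertions: (name, entity, start time in seconds)
pod_assertions = [
    ("PodCrash", ("Pod", "auth-7d9f"), 130),
    ("MemorySaturation", ("Pod", "auth-7d9f"), 60),
]


def propagate(assertions, parent_of):
    """Attach each assertion to its entity and to that entity's parent, in time order."""
    timeline = {}
    for name, entity, start in assertions:
        timeline.setdefault(entity, []).append((start, name, entity))
        parent = parent_of.get(entity)
        if parent is not None:
            timeline.setdefault(parent, []).append((start, name, entity))
    return {entity: sorted(items) for entity, items in timeline.items()}


timeline = propagate(pod_assertions, PARENT)

# The pod is ephemeral; dropping it leaves the node's copy of its assertions intact
del timeline[("Pod", "auth-7d9f")]
for start, name, source in timeline[("Node", "ip-10-0-86-240")]:
    print(start, name, source)
# 60 MemorySaturation ('Pod', 'auth-7d9f')
# 130 PodCrash ('Pod', 'auth-7d9f')
```

Only one level of parent is followed here; a deeper hierarchy would need a loop up the parent chain.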

In a way, assertion propagation is similar to metric aggregation in a traditional hierarchical metric model, but it is much more flexible and relevant, because it follows the relationships discovered in the graph rather than a fixed, predefined hierarchy.

Further Thoughts

While this blog briefly explains the current state of our observability knowledge graph, we have probably only scratched the surface. Going further, we could run inference algorithms on the graph to surface more interesting insights. Besides metrics, it is also possible to extract entities and relationships from text, which suggests that integrating logs and traces into the knowledge graph is also plausible.

If you are interested in our journey, feel free to try our sandbox and follow our blog.